“Common sense always speaks too late.” — Raymond Chandler

A new study about the (in)efficacy of anti-virus software in detecting the latest malware threats is a much-needed reminder that staying safe online is more about using your head than finding the right mix or brand of security software.

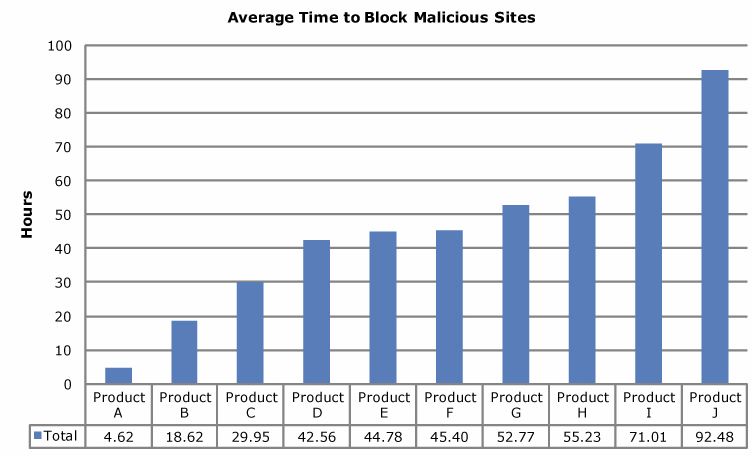

Last week, security software testing firm NSS Labs completed another controversial test of how the major anti-virus products fared in detecting malware pushed by malicious Web sites: Most of the products took an average of more than 45 hours — nearly two days — to detect the latest threats.

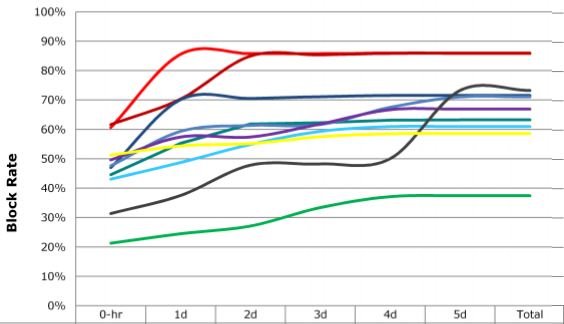

The two graphs below show the performance of the commercial versions of 10 top anti-virus products. NSS permitted the publication of these graphics without the legend showing how to track the performance of each product, in part because they are selling this information, but also because — as NSS President Rick Moy told me — they don’t want to become an advertisement for any one anti-virus company.

That’s fine with me because my feeling is that while products that come out on top in these tests may change from month to month, the basic takeaway for users should not: If you’re depending on your anti-virus product to save you from an ill-advised decision — such as opening an attachment in an e-mail you weren’t expecting, installing random video codecs from third-party sites, or downloading executable files from peer-to-peer file sharing networks — you’re playing Russian Roulette with your computer.

Some in the anti-virus industry have taken issue with NSS’s tests because the company refuses to show whether it is adhering to emerging industry standards for testing security products. The Anti-Malware Testing Standards Organization (AMTSO), a cantankerous coalition of security companies, anti-virus vendors and researchers, has cobbled together a series of best practices designed to set baseline methods for ranking the effectiveness of security software. The guidelines are meant in part to eliminate biases in testing, such as regional differences in anti-virus products and the relative age of the malware threats that they detect.

NSS was a member of the AMTSO until last fall, when the company parted ways with the group. NSS’s Moy said the standards focus on fairness to the anti-virus vendors at the expense of showing how well these products actually perform in real-world tests.

“We test at zero hour, and we have a huge funnel where we subject all of the [anti-virus] vendors to the same malicious URLs at the same time,” Moy said. “Generally, the other industry tests are testing days, weeks and months after malware samples have been on the Internet.”

David Harley, an AMTSO board member and director of malware intelligence for NOD32 maker ESET, didn’t quibble with the core findings in the NSS report, but rather with what he called the lack of transparency in NSS’s testing methodology.

“My quarrel with NSS is that they’re trying to quantify that Product A is better than Product B on the basis of an uncertain methodology,” Harley said. “I’m not quarreling with the proposition that the industry misses a lot of malware. That’s incontrovertible, when every day we’re dealing with close to 100,000 new malware samples. In fact, that sort of level of detection that NSS is talking about — 50 to 60 percent right out of the gate — sounds realistic to me.”

For all of the industry’s hand-wringing about results from outside testing firms, anti-virus testing labs are starting to move toward more real-time testing, said Alfred Huger, vice president of engineering at upstart anti-virus firm Immunet.

“People have to understand that anti-virus is more like a seatbelt than an armored car: It might help you in an accident, but it might not,” Huger said. “There are some things you can do to make sure you don’t get into an accident in the first place, and those are the places to focus, because things get dicey real quick when today’s malware gets past the outside defenses and onto the desktop.”